This article shares our experience of scaling a cloud solution on AWS to handle 5M requests per minute (about 83K req/sec).

Load Testing

We used load tests to find bottlenecks and ran 50 of them in total. Every test started with minimal cloud resources so that we could monitor how the system scaled.

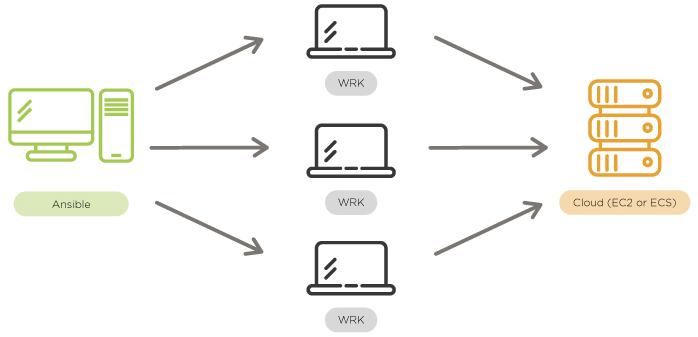

We used Apache Bench and wrk to generate load, and Ansible to control the load-generating machines ("bombers").

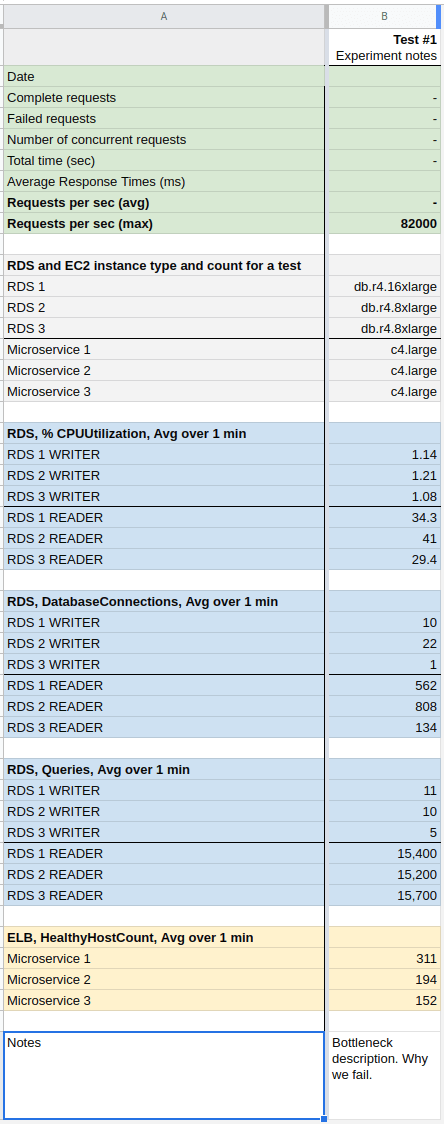

Metrics

We logged each test to Google Sheets with metrics, changes, and a description of bottlenecks. CloudWatch provided all the metrics we required.

ELB/ALB

- HealthyHostCount

- RequestCount

RDS

- CPUUtilization

- DatabaseConnections

- Queries

Microservices on EC2

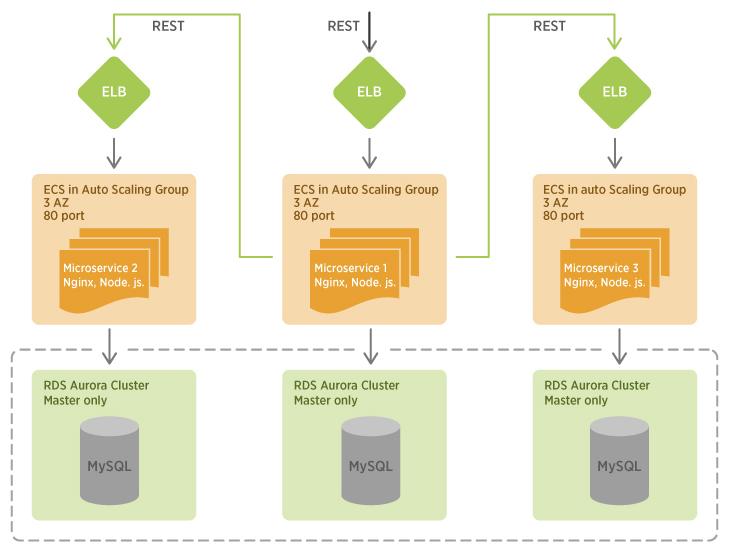

Let’s start with a simple microservice architecture on EC2 instances (without ECS). Each EC2 instance ran a single microservice. An ELB and an Auto Scaling group balanced the load between instances and added new instances under high load.

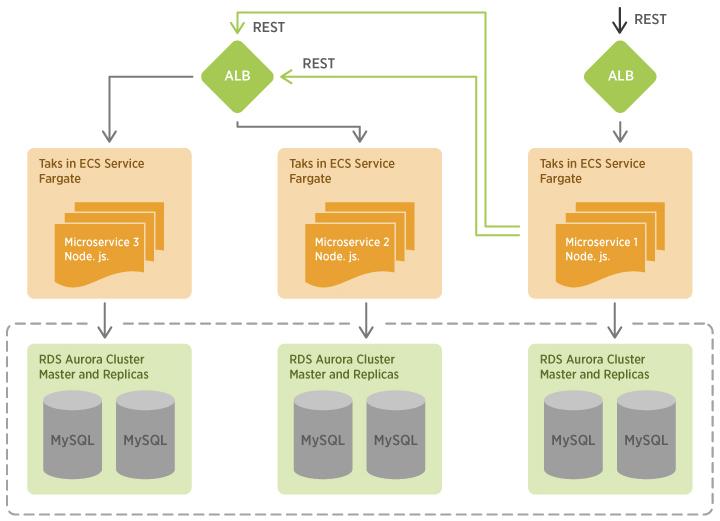

In the test, we used three Node.js microservices and three RDS clusters, one per service. Microservice 1 receives all requests, authorizes users with Microservice 2, and fetches some data from Microservice 3.

Here are some bottlenecks we found:

Babel — JavaScript compiler

Some microservices used Babel (babel-node) to compile and execute files. They already ran on the latest Node.js version, which supports everything we need, so Babel was unnecessary. We removed it and only added ESM so that we could keep using ES modules.

AWS stolen CPU

Under constant load, CloudWatch shows CPU load dropping from 90% to 40%, while htop on the instance shows 100% CPU load. The auto-scaling policy cannot react to this because it relies on the incorrect CloudWatch metric.

This problem is known as “AWS stolen CPU.” If your service depends on constant high CPU load, you should move away from T2 (CPU Credits and Burstable Performance) to instances like C4 or C5 (Compute Intensive).
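One way to observe this from inside the instance is to read the `steal` counter that Linux exposes in `/proc/stat`. The sketch below is only an illustration, not what we ran during the tests: it assumes a Linux guest, assumes the throttling shows up as steal time, and the function names are hypothetical.

```javascript
const fs = require("node:fs");

// Parse the aggregate "cpu" line of /proc/stat into total and steal jiffies.
// Field order (proc(5)): user nice system idle iowait irq softirq steal guest guest_nice
function parseCpuLine(statText) {
  const line = statText.split("\n").find((l) => l.startsWith("cpu "));
  if (!line) throw new Error("no aggregate cpu line found");
  const fields = line.trim().split(/\s+/).slice(1).map(Number);
  // Sum only the first eight fields: guest time is already counted in user/nice.
  const total = fields.slice(0, 8).reduce((sum, v) => sum + v, 0);
  const steal = fields[7] || 0;
  return { total, steal };
}

// Percentage of CPU time taken away by the hypervisor between two samples.
function stealPercent(before, after) {
  const totalDelta = after.total - before.total;
  if (totalDelta <= 0) return 0;
  return (100 * (after.steal - before.steal)) / totalDelta;
}

// Sample /proc/stat twice, intervalMs apart, and report the steal percentage.
function sampleSteal(intervalMs, callback) {
  const before = parseCpuLine(fs.readFileSync("/proc/stat", "utf8"));
  setTimeout(() => {
    const after = parseCpuLine(fs.readFileSync("/proc/stat", "utf8"));
    callback(stealPercent(before, after));
  }, intervalMs);
}
```

Calling `sampleSteal(1000, (pct) => console.log(pct))` on an affected instance would print the share of the last second that the hypervisor withheld. A persistently high value alongside a low CloudWatch CPUUtilization would match the symptom described above.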

AWS Limits

We needed 657 C4.large instances to reach 82,000 req/sec, and at that point we hit the limit of our subnet size. The easiest solution is to use a more powerful instance type, such as C4.4xlarge, so that fewer instances (and fewer IP addresses) are needed.

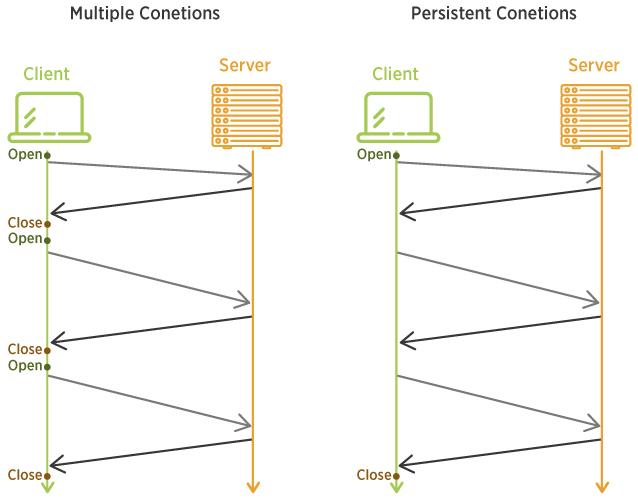
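The subnet ceiling can be estimated up front. AWS reserves five addresses in every subnet (the first four and the last one), so a subnet with prefix length `p` offers `2^(32 - p) - 5` usable addresses. The article does not state the actual CIDR we used, so the prefix lengths below are purely illustrative, and the sketch assumes one private IP address per instance.

```javascript
// AWS reserves the first four addresses and the last address of every subnet.
const AWS_RESERVED_IPS = 5;

// Usable IPv4 addresses in a VPC subnet with the given prefix length.
// VPC subnets must be between /16 and /28.
function usableSubnetIps(prefixLength) {
  if (!Number.isInteger(prefixLength) || prefixLength < 16 || prefixLength > 28) {
    throw new RangeError("VPC subnet prefix must be an integer from 16 to 28");
  }
  return 2 ** (32 - prefixLength) - AWS_RESERVED_IPS;
}

// Number of equally sized subnets needed to hold a fleet of instances.
function subnetsNeeded(instanceCount, prefixLength) {
  return Math.ceil(instanceCount / usableSubnetIps(prefixLength));
}

console.log(usableSubnetIps(24));    // 251
console.log(usableSubnetIps(22));    // 1019
console.log(subnetsNeeded(657, 24)); // 3
```

Load balancers and other managed services also consume addresses from the same subnets, so the real headroom is smaller than these numbers suggest.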

Inter-service communication

On C4.4xlarge, we ran Node.js in PM2 cluster mode with 16 processes, but we still could not reach even 40% CPU load.

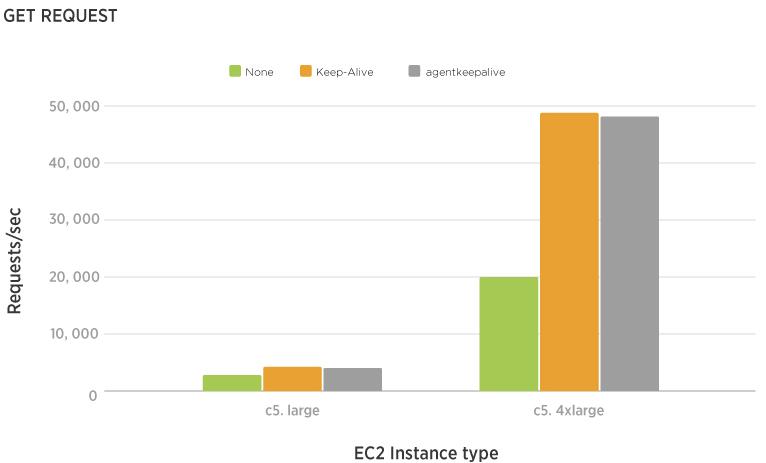
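For reference, a PM2 process file for this kind of setup might look like the sketch below. The service name and entry point are hypothetical; `exec_mode` and `instances` are standard PM2 options, and 16 matches the number of vCPUs on a C4.4xlarge.

```javascript
// ecosystem.config.js (hypothetical PM2 process file)
const pm2Config = {
  apps: [
    {
      name: "microservice-1", // hypothetical service name
      script: "./server.js",  // hypothetical entry point
      exec_mode: "cluster",   // cluster mode: all workers share one listening port
      instances: 16,          // one worker per vCPU on a C4.4xlarge
    },
  ],
};

module.exports = pm2Config;
```

Such a file is started with `pm2 start ecosystem.config.js`. Running one worker per vCPU removes the single-process limit of Node.js, which is why low CPU utilization in this configuration points to a bottleneck outside the application code.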

The microservices communicated through REST APIs over HTTP/1.1. The bottleneck was the extra TCP overhead of opening a new connection for every request.

The solution was to use the KeepAlive flag and reuse TCP connections for HTTP requests. Other possible solutions would be to use REST over HTTP/2 or gRPC (HTTP/2 under the hood).
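In Node.js, connection reuse is controlled by the `keepAlive` option of `http.Agent`. The following is a minimal sketch of the idea rather than our production code: the helper name `getJson`, the socket cap, and the host in the usage note are assumptions for illustration.

```javascript
const http = require("node:http");

// Agent that keeps idle sockets open and reuses them for later requests,
// instead of performing a new TCP handshake for every call.
const keepAliveAgent = new http.Agent({
  keepAlive: true,
  maxSockets: 64, // illustrative cap on concurrent sockets per host
});

// GET a JSON resource from another service over a reused connection.
function getJson(host, port, path) {
  return new Promise((resolve, reject) => {
    const req = http.get({ host, port, path, agent: keepAliveAgent }, (res) => {
      let body = "";
      res.setEncoding("utf8");
      res.on("data", (chunk) => {
        body += chunk;
      });
      res.on("end", () => {
        try {
          resolve(JSON.parse(body));
        } catch (err) {
          reject(err);
        }
      });
    });
    req.on("error", reject);
  });
}
```

A call such as `getJson("auth.internal", 8080, "/users/42")` (hypothetical host and path) would then share a small pool of long-lived sockets across requests. Recent Node.js releases (19 and later) enable keep-alive on the default global agent, so an explicit agent matters most on older versions or when the pool size needs tuning.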

So we successfully reached the goal with microservices on EC2. Now let’s try serverless microservices with ECS Fargate.

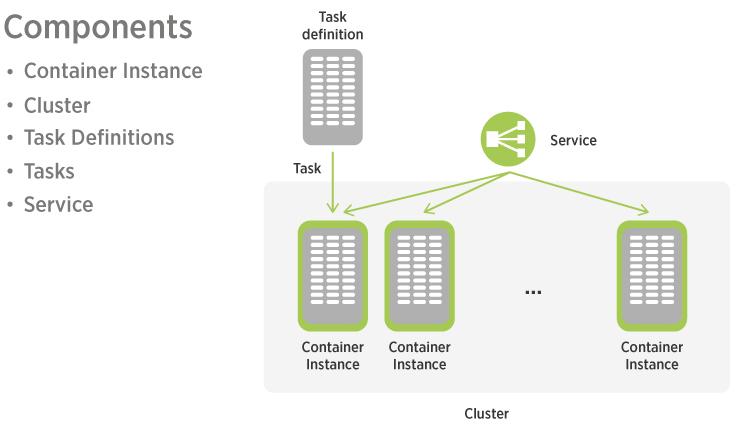

Microservices on ECS Fargate

ECS is a container orchestration service managed by AWS. Fargate is a compute engine for ECS that lets us set the resources for each service and forget about servers. Together they give us serverless microservices.

Example of Serverless Microservice architecture on ECS Fargate.

ECS Fargate

Pros

- Easy to start with a simple configuration

- You don’t need to manage the OS, security patches, support, or AWS configuration

- Container image repository

Cons

- One task (container) is limited to 4 vCPU and 30 GB RAM

- Lower performance compared to EC2

- Increasing Fargate limits in our case took a couple of weeks.

EC2 vs. Fargate performance and cost

EC2

- 82,000 req/sec

- 657 C4.large (2 vCPU)

- 4 vCPU, 7.5 GB (C4.xlarge), $0.199 per hour

Fargate

- 58,667 req/sec

- 724 tasks (4 vCPU)

- 4 vCPU, 8 GB, $0.197 per hour

Fargate gives us serverless microservices, but it has a performance and cost penalty in comparison to an EC2 instance.
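The performance gap is easier to see when the reported throughput is normalized per vCPU. The calculation below uses only the figures listed above. It is a rough comparison that assumes the vCPUs were the limiting resource in both setups.

```javascript
// Figures reported in the comparison above.
const ec2 = { reqPerSec: 82000, units: 657, vcpuPerUnit: 2 };     // C4.large instances
const fargate = { reqPerSec: 58667, units: 724, vcpuPerUnit: 4 }; // Fargate tasks

// Throughput handled by a single vCPU of the given setup.
function reqPerSecPerVcpu({ reqPerSec, units, vcpuPerUnit }) {
  return reqPerSec / (units * vcpuPerUnit);
}

const ec2PerVcpu = reqPerSecPerVcpu(ec2);
const fargatePerVcpu = reqPerSecPerVcpu(fargate);

console.log(ec2PerVcpu.toFixed(1));                    // "62.4"
console.log(fargatePerVcpu.toFixed(1));                // "20.3"
console.log((ec2PerVcpu / fargatePerVcpu).toFixed(1)); // "3.1"
```

By this measure each EC2 vCPU served roughly three times as many requests per second as a Fargate vCPU in our tests.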

Conclusion

- Size your AWS VPC subnets to support your future workloads.

- Check your AWS EC2 and Fargate limits up front.

- Enable KeepAlive for all inter-service communication (this can dramatically increase request throughput).

- Never use Babel in a production backend (it can add bottlenecks to asynchronous operations and cause memory leaks).

- Don’t use T2/T3 instances for CPU-intensive tasks.

- Don’t put Nginx in front of a microservice: it is an extra layer that you need to configure properly, and it can reduce your req/sec.

- Use JWT where you can.
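On the JWT point: a signed token can be verified locally by the receiving service, which presumably avoids a round trip to a separate authorization service on every request. The sketch below shows the idea for an HS256 token using only `node:crypto`. The shared secret, the claim names, and both function names are assumptions for illustration, and a maintained JWT library is the safer choice in production.

```javascript
const crypto = require("node:crypto");

// HMAC-SHA256 signature of the "header.payload" string, base64url-encoded.
function sign(data, secret) {
  return crypto.createHmac("sha256", secret).update(data).digest("base64url");
}

// Create an HS256 token (included only so the example is self-contained).
function createToken(payload, secret) {
  const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString("base64url");
  const signingInput = `${encode({ alg: "HS256", typ: "JWT" })}.${encode(payload)}`;
  return `${signingInput}.${sign(signingInput, secret)}`;
}

// Verify signature and expiry locally; returns the payload, or null if invalid.
function verifyToken(token, secret, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;

  // Compare signatures in constant time to avoid leaking timing information.
  const expected = Buffer.from(sign(`${header}.${body}`, secret));
  const actual = Buffer.from(signature);
  if (expected.length !== actual.length || !crypto.timingSafeEqual(expected, actual)) {
    return null;
  }

  try {
    const headerJson = JSON.parse(Buffer.from(header, "base64url").toString("utf8"));
    const payload = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
    if (!headerJson || headerJson.alg !== "HS256") return null;
    if (!payload || typeof payload !== "object") return null;
    // Reject tokens whose "exp" claim (seconds since epoch) has passed.
    if (typeof payload.exp === "number" && payload.exp <= nowSeconds) return null;
    return payload;
  } catch {
    return null;
  }
}
```

With this approach only the service that issues tokens needs to hit the user database. Every other service checks the signature in memory.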

TAKEAWAYS

This article shares knowledge that we apply in practice. See our latest case study: Increasing the scalability of a cloud-based system for IoT products. If you need advice on your cloud system, Sirin Software has expertise in this area and is ready to share it with you. Contact us and we’ll be happy to find the best solution together with you!