Kubernetes: a new solution for managing containerized apps

Kubernetes (K8s for short) is an open-source system for building a fault-tolerant, scalable platform that automates and centrally manages containerized applications. The platform itself can be deployed on almost any infrastructure: a local network, a server cluster, a data center, or any kind of cloud, whether public (Google Cloud, Microsoft Azure, AWS, etc.), private, hybrid, or a combination of these. Notably, Kubernetes supports automatic placement and replication of containers across a large number of hosts.

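Early versions of Kubernetes used Docker by default to run images and manage containers. Nevertheless, K8s can use other engines as well: early on, for example, rkt from CoreOS, and in current releases any runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O (built-in Docker Engine support via dockershim was removed in Kubernetes 1.24). Compared with Docker Swarm, Kubernetes is more mature and functionally richer, although it is generally considered harder to configure.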

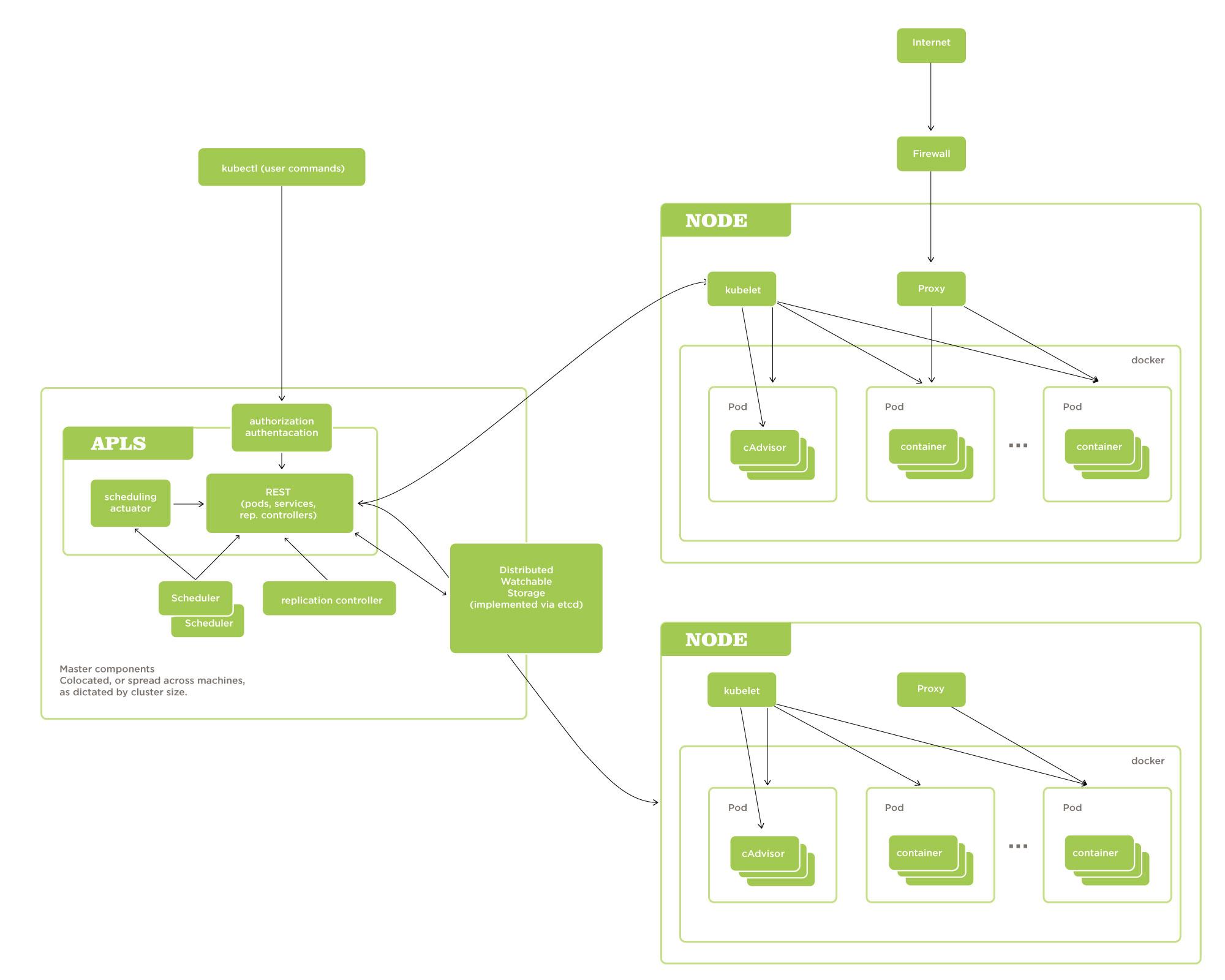

Architecture

To build a platform based on Kubernetes, you will need physical or virtual servers that perform the following roles:

- A node is a separate host (physical or virtual machine) in the Kubernetes cluster, used to run workloads and containerized applications;

- A minion node (now usually called a worker node) is a node used only for deploying and running application containers;

- The master node is the node that runs the Kubernetes control plane components (note that nothing prevents the same node from also acting as a minion if resources allow). A cluster may have more than one master node, either to distribute the load among them or to provide fault tolerance.

Kubelet is an agent process that runs on each minion node; it manages that node's pods and monitors their load and health.

K8s lets you work with containerized applications through a set of abstractions:

- A pod is an abstraction that groups one or more containers together with shared resources such as networking and storage. Pods are the units in which containers are started and managed in the cluster, and they also help provide fault tolerance;

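- A controller is a Kubernetes control process that continuously drives the cluster's actual state toward the desired state. One particular case is the Replication Controller, which keeps a specified number of pod replicas running (in newer releases this role is usually filled by ReplicaSets managed by Deployments);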

- A service is a logical set of one or more pods together with a policy for accessing them. Services give pods a stable network endpoint and are commonly used to expose hosted applications, including to clients outside the cluster;

- A volume is a shared storage resource. Kubernetes supports a wide range of storage types: local disks, NAS, cloud storage, virtual volumes, etc.;

- An operator is a custom controller that helps manage a stateful application within the cluster; in addition, it keeps the application-specific details of that management separate from the core K8s control processes;

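- A label is a key/value pair attached to cluster objects. Labels are not unique identifiers: instead, label selectors use them to find and group related objects, for example, the pods that belong to a particular service.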
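To make the relationship between labels and selectors concrete, here is a minimal sketch in plain Python, assuming simple equality-based selectors (Kubernetes also supports set-based selectors, which this sketch omits). It does not use any Kubernetes client library; the `Pod` class and the `matches` and `select` helpers are hypothetical stand-ins for how a service finds its pods by label.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    # Arbitrary key/value labels; several pods may carry identical labels.
    labels: dict[str, str] = field(default_factory=dict)


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every key/value pair in the selector must be present."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict[str, str], pods: list[Pod]) -> list[Pod]:
    """Return every pod whose labels satisfy the selector (hypothetical helper)."""
    return [pod for pod in pods if matches(selector, pod.labels)]


pods = [
    Pod("web-1", {"app": "web", "tier": "frontend"}),
    Pod("web-2", {"app": "web", "tier": "frontend"}),
    Pod("db-1", {"app": "db", "tier": "backend"}),
]

# A service selecting app=web would route traffic to both web pods.
print([pod.name for pod in select({"app": "web"}, pods)])  # ['web-1', 'web-2']
```

Because the selector matches a group rather than a single object, new pods that carry the same labels are picked up automatically, which is what lets a service keep working as pods come and go.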

In fact, the K8s ecosystem contains many more abstractions (but those listed above are enough to convey the essence of the project). Together, they make it easier to automate deployment, load balancing, and the coordination of application processes.

Principles of Operation

The key selling point of Kubernetes is that all the resources an application needs are allocated dynamically among the pods it runs in.

A working Kubernetes cluster includes local agents running on the nodes (Kubelets), control components (the API server, the scheduler, etc.), and distributed storage (etcd) that holds the cluster state. Containers are encapsulated in pods, and each pod is assigned its own IP address, which the containers in that pod share and through which they are accessed.
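Deciding which node a new pod should run on is the scheduler's job. The following is a deliberately simplified sketch of that filter-then-score idea, assuming each node advertises free CPU (in millicores) and free memory (in MiB) and that the feasible node with the most free CPU wins. The `Node`, `PodRequest` and `schedule` names are hypothetical; the real kube-scheduler uses a much richer set of filtering and scoring rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores (assumed unit for this sketch)
    free_mem_mib: int  # free memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int
    mem_mib: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[Node]:
    """Place a pod on a node, or return None if no node has room.

    Step 1 (filter): keep only nodes with enough free CPU and memory.
    Step 2 (score): prefer the feasible node with the most free CPU.
    """
    feasible = [n for n in nodes
                if n.free_cpu_m >= pod.cpu_m and n.free_mem_mib >= pod.mem_mib]
    if not feasible:
        return None
    best = max(feasible, key=lambda n: n.free_cpu_m)
    # Reserve the pod's resources so later pods see the reduced capacity.
    best.free_cpu_m -= pod.cpu_m
    best.free_mem_mib -= pod.mem_mib
    return best


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
placed = schedule(PodRequest("web-1", 250, 256), nodes)
print(placed.name if placed else "unschedulable")  # node-b
print(schedule(PodRequest("batch-1", 4000, 512), nodes))  # None
```

A pod that fits nowhere is simply left unplaced here; in a real cluster it would stay pending until capacity frees up.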

On each node, Kubelet manages the pods and their containers, images, volumes, etc. It installs and launches application containers within the pods and checks that they are working correctly. If Kubelet notices that a container has failed, it tries to restart it (container state is checked every few seconds). When the master detects that a whole node has become unhealthy, the replication controller (a kind of process supervisor that can manage processes on many nodes at once) recreates the lost pods on healthy nodes that have free resources. This is what makes the system fault-tolerant.
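The recovery behaviour described above follows a reconciliation pattern: the controller repeatedly compares the desired number of replicas with the pods actually running and closes the gap. The sketch below is a toy model under stated assumptions: nodes are just names with a `healthy` flag, pods are tracked in a dict, the pod count per node stands in for "free resources", and `ReplicationController.reconcile` is a hypothetical method, not part of any Kubernetes API.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True


class ReplicationController:
    """Toy model of a controller that keeps `replicas` pods on healthy nodes."""

    def __init__(self, name: str, replicas: int) -> None:
        self.name = name
        self.replicas = replicas
        self.pods: dict[str, str] = {}  # pod name -> node name
        self._ids = itertools.count(1)  # source of unique pod suffixes

    def reconcile(self, nodes: list[Node]) -> None:
        """One pass of the control loop: compare desired vs. actual and fix the gap."""
        healthy = [n.name for n in nodes if n.healthy]
        # Pods that were running on an unhealthy node are considered lost.
        self.pods = {pod: node for pod, node in self.pods.items() if node in healthy}
        # Surplus pods (e.g. after scaling down) are removed.
        while len(self.pods) > self.replicas:
            self.pods.pop(next(iter(self.pods)))
        # Missing pods are recreated on the least-loaded healthy node.
        while len(self.pods) < self.replicas and healthy:
            load = {n: list(self.pods.values()).count(n) for n in healthy}
            target = min(healthy, key=load.__getitem__)
            self.pods[f"{self.name}-{next(self._ids)}"] = target


nodes = [Node("node-a"), Node("node-b"), Node("node-c")]
rc = ReplicationController("web", replicas=3)
rc.reconcile(nodes)   # web-1, web-2, web-3 are spread over the three nodes
nodes[1].healthy = False
rc.reconcile(nodes)   # web-2 is lost with node-b; web-4 replaces it
print(sorted(rc.pods.items()))
# [('web-1', 'node-a'), ('web-3', 'node-c'), ('web-4', 'node-a')]
```

The controller never "moves" a pod: it notices that one is missing and creates a fresh replacement, which is why running the same loop again on an already-correct state changes nothing.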

How Do Kubernetes and Docker Work Together?

And now – a few words about how the interaction between Kubernetes and Docker is organized.

As you may already know, Docker is a universal solution for launching and managing containerized software. Docker's main slogan is "Build, Ship, and Run Any App, Anywhere". Docker originally relied on LXC to run containers and later replaced it with its own libcontainer library, which has since become the basis of runc, the Open Container Initiative's reference runtime.

Kubernetes and Docker work well together (although it is worth repeating that Kubernetes can also use other container runtimes). K8s organizes and automates horizontal scaling and keeps the cluster running smoothly. The interaction between the two works as follows.

When Kubernetes schedules a pod onto a particular node, Kubelet on that node prompts Docker to run the pod's containers. Kubelet then records the status of the launched containers and reports it to the master. From then on, Docker runs the containers on that node, and Kubelet uses it to start and stop them as needed.

Another notable feature of K8s is that several pods can run simultaneously on a single node, and each pod can contain several containers, each of which is a separate Docker container.
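The cooperation between Kubelet and the container engine can be modelled as a sync loop: Kubelet compares the containers that the pods on its node should have with those the engine reports as running, asks the engine to start or stop containers accordingly, and reports the result. In this sketch `FakeRuntime` is a hypothetical stand-in for Docker (its `start`, `stop` and `list_running` methods are invented for illustration and are not Docker's API), and `sync_node` is likewise illustrative.

```python
class FakeRuntime:
    """Hypothetical stand-in for a container engine such as Docker."""

    def __init__(self) -> None:
        self._running: set[str] = set()

    def start(self, container_id: str) -> None:
        self._running.add(container_id)

    def stop(self, container_id: str) -> None:
        self._running.discard(container_id)

    def list_running(self) -> set[str]:
        return set(self._running)


def sync_node(pods: dict[str, list[str]], runtime: FakeRuntime) -> dict[str, list[str]]:
    """One Kubelet-style sync pass over every pod assigned to this node.

    `pods` maps pod name -> container names; containers are identified as
    "pod/container". Missing containers are started, containers that belong
    to no pod are stopped, and a per-pod status report (what would be sent
    to the master) is returned.
    """
    desired = {f"{pod}/{c}" for pod, containers in pods.items() for c in containers}
    actual = runtime.list_running()
    for cid in sorted(desired - actual):
        runtime.start(cid)
    for cid in sorted(actual - desired):
        runtime.stop(cid)
    running = runtime.list_running()
    return {pod: sorted(c for c in containers if f"{pod}/{c}" in running)
            for pod, containers in pods.items()}


runtime = FakeRuntime()
runtime.start("old-pod/app")  # leftover container from a pod that was deleted
status = sync_node({"web-1": ["nginx", "log-agent"], "api-1": ["api"]}, runtime)
print(status)                          # {'web-1': ['log-agent', 'nginx'], 'api-1': ['api']}
print(sorted(runtime.list_running()))  # ['api-1/api', 'web-1/log-agent', 'web-1/nginx']
```

The example also shows two pods on one node, one of them with two containers, each tracked as a separate engine-level container.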

Benefits of Using Kubernetes

The Kubernetes project was announced in June 2014, when Google decided to make public some of the ideas developed in its internal Borg project. Today, Google also offers Kubernetes as a managed service on its cloud platform. Notably, K8s covers a broad range of software deployment concerns, from images to telemetry and security. If you need to scale, you can increase the number of pods for individual services only, without affecting the application's entire infrastructure.
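Scaling a single service comes down to changing that service's replica count. As an illustration, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), to each service independently. The service names, CPU figures and 60% target are made up, and the sketch ignores the tolerance band and stabilization window the real autoscaler applies.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float) -> int:
    """Proportional scaling rule: ceil(current * current_metric / target_metric)."""
    return math.ceil(current * current_metric / target_metric)


# Hypothetical per-service state: replica count and average CPU utilisation (%).
services = {
    "frontend": {"replicas": 4, "cpu": 90.0},
    "billing": {"replicas": 2, "cpu": 50.0},
}

TARGET_CPU = 60.0  # assumed target utilisation for this example

# Each service is scaled on its own metrics; the others are not touched.
for svc in services.values():
    svc["replicas"] = desired_replicas(svc["replicas"], svc["cpu"], TARGET_CPU)

print(services["frontend"]["replicas"], services["billing"]["replicas"])  # 6 2
```

The overloaded frontend grows from 4 to 6 pods while billing stays at 2, which is the per-service scaling described above.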

At the time of writing, developers have three main systems to choose from for deploying container clusters: Kubernetes, Mesosphere, and Docker Swarm. They differ in the breadth of their functionality and in the tasks they were designed to solve.

In particular, Docker Swarm lets you join several machines into a unified virtualized environment for launching containers. Mesosphere, in turn, appeared before the concept of containers had fully taken shape, which is why it is not as well adapted to working with them. Kubernetes, for its part, lets you not only combine the computing power of several hosts but also provide load balancing, performance monitoring, and updates of containerized apps (updates are implemented through the rolling update mechanism, which replaces pods without any downtime).
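A rolling update can be sketched as a loop that brings up one pod of the new version before retiring one pod of the old version, so the number of running pods never drops below the desired count. This is a simplified model assuming a surge of one pod and zero unavailable pods per step (roughly analogous to a Deployment configured with maxSurge=1 and maxUnavailable=0); pods are represented only by their version strings, and readiness checks and failed rollouts are ignored.

```python
def rolling_update(pods: list[str], new_version: str) -> list[list[str]]:
    """Replace every pod with `new_version`, one pod at a time.

    Each step first starts one extra pod of the new version (surge of 1) and
    only then retires one old pod (zero unavailable), so the number of pods
    never drops below the original count. Returns a snapshot after each step.
    """
    current = list(pods)
    snapshots = []
    while any(version != new_version for version in current):
        current.append(new_version)  # surge: start one new pod first
        old = next(version for version in current if version != new_version)
        current.remove(old)          # then retire the first remaining old pod
        snapshots.append(list(current))
    return snapshots


for step in rolling_update(["v1", "v1", "v1"], "v2"):
    print(step)
# ['v1', 'v1', 'v2']
# ['v1', 'v2', 'v2']
# ['v2', 'v2', 'v2']
```

Because a new pod always exists before an old one is removed, the service keeps at least three pods throughout the update, which is what allows it to proceed without downtime.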

Thus, deploying Kubernetes is well worth it when you need to:

- Provide fast, cost-effective horizontal scalability by distributing Docker containers over multiple hosts;

- Reduce the need for new hardware resources when scaling;

- Provide comprehensive control and automation of administration processes;

- Introduce mechanisms for replication, scaling, self-healing, and restoring system operability;

- Provide increased fault tolerance and minimize downtime by launching containers on different machines.

Summary

Kubernetes is a relatively young project, yet it has already been chosen by such renowned companies as SAP, Concur Technologies, IBM, Red Hat, Rackspace, etc. In addition, the three giants of cloud computing, Google, Microsoft, and Amazon, offer Kubernetes as a managed service on their commercial cloud platforms. Wouldn't you say that this is a success?

We suggest that our customers deploy their containerized applications on K8s. With it, you will see first-hand how fast and cost-effective automated, fault-tolerant horizontal scaling can be. Do you have an idea for a project? Contact us today to discuss all the features, fine details, and possible ways to implement your ideas.